Overview

A multitude of important real-world datasets come together with some form of graph structure: social networks, citation networks, protein-protein interactions, brain connectome data, etc. Extending neural networks to be able to properly deal with this kind of data is therefore a very important direction for machine learning research, but one that has received comparatively rather low levels of attention until very recently.

|

|---|

| Motivating examples of graph-structured inputs: molecular networks, transportation networks, social networks and brain connectome networks. |

Here we will present our ICLR 2018 work on Graph Attention Networks (GATs), novel neural network architectures that operate on graph-structured data, leveraging masked self-attentional layers (Vaswani et al., 2017) to address the shortcomings of prior methods based on graph convolutions or their approximations (including, but not limited to: Bruna et al., 2014; Duvenaud et al., 2015; Li et al., 2016; Defferrard et al., 2016; Gilmer et al., 2017; Kipf and Welling, 2017; Monti et al., 2017; Hamilton et al., 2017).

Motivation for graph convolutions

We can think of graphs as encoding a form of irregular spatial structure—and therefore, it would be highly appropriate if we could somehow generalise the convolutional operator (as used in CNNs) to operate on arbitrary graphs!

CNNs are a major workforce when it comes to working with image data. They exploit the fact that images have a highly rigid and regular connectivity pattern (each pixel “connected” to its eight neighbouring pixels), making such an operator trivial to deploy (as a small kernel matrix which is slid across the image).

| 2D convolutional operator as applied to a grid-structured input (e.g. image). |

Arbitrary graphs are a much harder challenge! Ideally, we would like to aggregate information across each of the nodes’ neighbourhoods in a principled manner, but we are no longer guaranteed such rigidity of structure.

|

|---|

| A desirable form of a graph convolutional operator. |

Enumerating the desirable traits of image convolutions, we arrive at the following properties we would ideally like our graph convolutional layer to have:

- Computational and storage efficiency (requiring no more than \(O(V+E)\) time and memory);

- Fixed number of parameters (independent of input graph size);

- Localisation (acting on a local neighbourhood of a node);

- Ability to specify arbitrary importances to different neighbours;

- Applicability to inductive problems (arbitrary, unseen graph structures).

Satisfying all of the above at once has proved to be quite challenging, and indeed, none of the prior techniques have been successful at achieving them simultaneously.

Towards a viable graph convolution

Consider a graph of \(n\) nodes, specified as a set of node features, \((\vec{h}_1, \vec{h}_2, \dots, \vec{h}_n)\), and an adjacency matrix \(\bf A\), such that \({\bf A}_{ij} = 1\) if \(i\) and \(j\) are connected, and \(0\) otherwise1. A graph convolutional layer then computes a set of new node features, \((\vec{h}’_1, \vec{h}’_2, \dots, \vec{h}’_n)\), based on the input features as well as the graph structure.

Every graph convolutional layer starts off with a shared node-wise feature transformation (in order to achieve a higher-level representation), specified by a weight matrix \({\bf W}\). This transforms the feature vectors into \(\vec{g}_i = {\bf W}\vec{h}_i\). After this, the vectors \(\vec{g}_i\) are typically recombined in some way at each node.

In general, to satisfy the localisation property, we will define a graph convolutional operator as an aggregation of features across neighbourhoods; defining \(\mathcal{N}_i\) as the neighbourhood of node \(i\) (typically consisting of all first-order neighbours of \(i\), including \(i\) itself), we can define the output features of node \(i\) as:

\[\vec{h}'_i = \sigma\left(\sum_{j\in\mathcal{N}_i}\alpha_{ij}\vec{g}_j\right)\]where \(\sigma\) is an activation function, and \(\alpha_{ij}\) specifies the weighting factor (importance) of node \(j\)’s features to node \(i\).

Most prior work defines \(\alpha_{ij}\) explicitly2 (either based on the structural properties of the graph, or as a learnable weight); this requires compromising at least one other desirable property.

Graph Attention Networks

We instead decide to let \(\alpha_{ij}\) be implicitly defined, employing self-attention over the node features to do so. This choice was not without motivation, as self-attention has previously been shown to be self-sufficient for state-of-the-art-level results on machine translation, as demonstrated by the Transformer architecture (Vaswani et al., 2017).

Generally, we let \(\alpha_{ij}\) be computed as a byproduct of an attentional mechanism, \(a : \mathbb{R}^N \times \mathbb{R}^N \rightarrow \mathbb{R}\), which computes unnormalised coefficients \(e_{ij}\) across pairs of nodes \(i, j\), based on their features:

\[e_{ij} = a(\vec{h}_i, \vec{h}_j)\]We inject the graph structure by only allowing node \(i\) to attend over nodes in its neighbourhood, \(j \in \mathcal{N}_i\). These coefficients are then typically normalised using the softmax function, in order to be comparable across different neighbourhoods:

\[\alpha_{ij} = \frac{\exp(e_{ij})}{\sum_{k\in\mathcal{N}_i}\exp(e_{ik})}\]Our framework is agnostic to the choice of attentional mechanism \(a\): in our experiments, we employed a simple single-layer neural network. The parameters of the mechanism are trained jointly with the rest of the network in an end-to-end fashion.

Regularisation

To stabilise the learning process of self-attention, we have found multi-head attention to be very beneficial (as was the case in Vaswani et al., 2017). Namely, the operations of the layer are independently replicated \(K\) times (each replica with different parameters), and outputs are featurewise aggregated (typically by concatenating or adding).

\[\vec{h}'_i = {\LARGE \|}_{k=1}^K \sigma\left(\sum_{j\in\mathcal{N}_i}\alpha_{ij}^k{\bf W}^k\vec{h}_j\right)\]where \(\alpha_{ij}^k\) are the attention coefficients derived by the \(k\)-th replica, and \({\bf W}^k\) the weight matrix specifying the linear transformation of the \(k\)-th replica.

With the setup of the preceding sections, this fully specifies a Graph Attention Network (GAT) layer!

| A GAT layer with multi-head attention. Every neighbour \(i\) of node \(1\) sends its own vector of attentional coefficients, \(\vec{\alpha}_{1i}\) one per each attention head \(\alpha_{1i}^k\). These are used to compute \(K\) separate linear combinations of neighbours’ features \(\vec{h}_i\), which are then aggregated (typically by concatenation or averaging) to obtain the next-level features of node \(1\), \(\vec{h}’_1\). |

Furthermore, we have found that applying dropout (Srivastava et al., 2014) to the attentional coefficients \(\alpha_{ij}\) was a highly beneficial regulariser, especially for small training datasets. This effectively exposes nodes to stochastically sampled neighbourhoods during training, in a manner reminiscent of the (concurrently published) FastGCN method (Chen et al., 2018).

Properties

A brief analysis of the properties of this layer reveals that it satisfies all of the desirable properties for a graph convolution:

- Computationally efficient: the computation of attentional coefficients can be parallelised across all edges of the graph, and the aggregation may be parallelised across all nodes;

- Storage efficient: It is possible to implement a GAT layer using sparse matrix operations only, requiring no more than \(O(V+E)\) entries to be stored anywhere;

- Fixed number of parameters, irrespective of the graph’s node or edge count;

- Trivially localised, as we only attend over neighbourhoods;

- Allows for (implicitly) specifying different importances to different neighbours;

- Readily applicable to inductive problems, as it is a shared edge-wise mechanism and therefore does not depend on the global graph structure!

To the best of our knowledge, this is the first proposed graph convolution layer to do so.

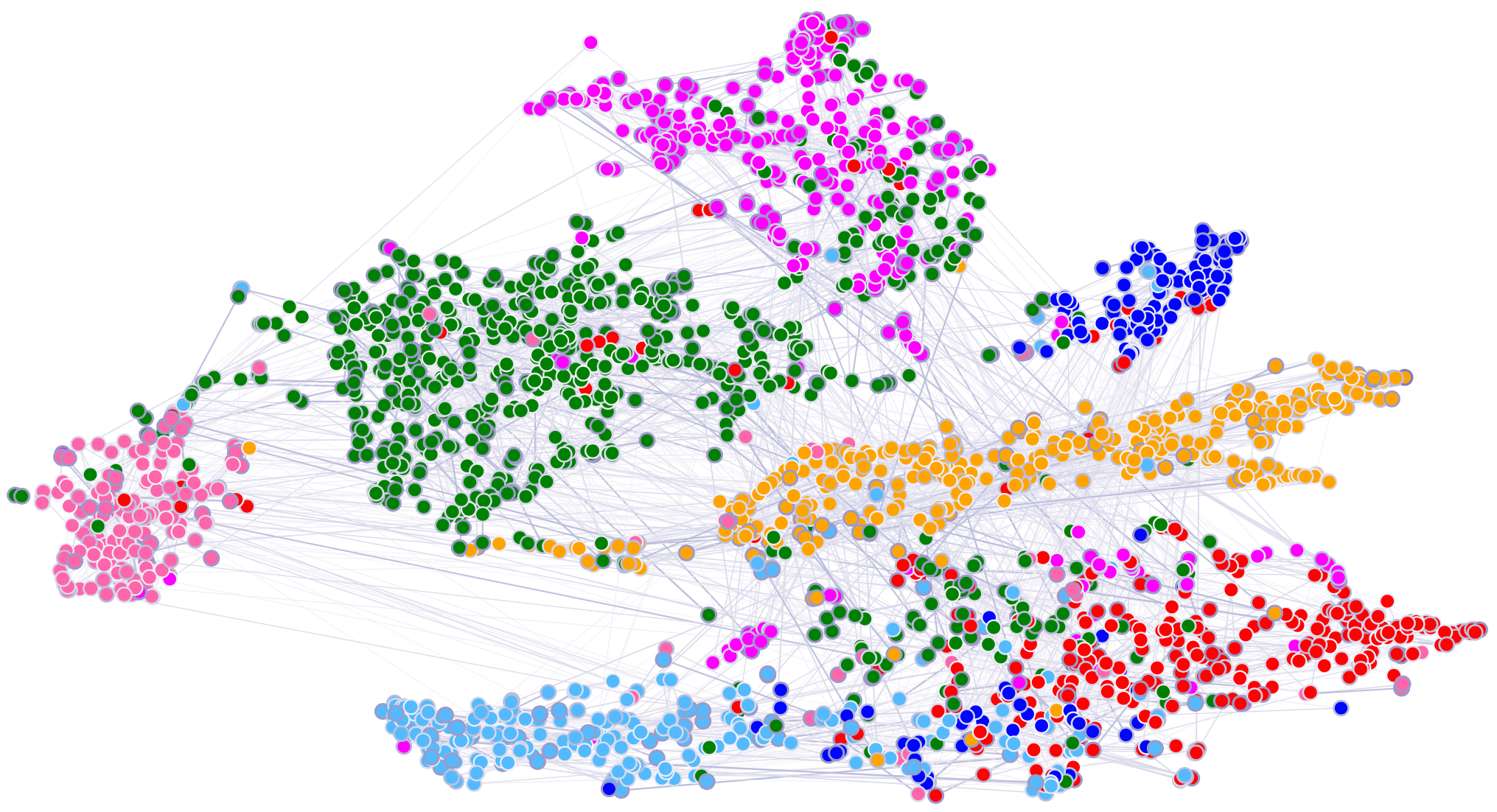

These theoretical properties have been further validated within our paper by matching or exceeding state-of-the-art performance across four challenging transductive and inductive node classification benchmarks (Cora, Citeseer, PubMed and PPI). t-SNE visualisations on the Cora dataset further demonstrate that our model is capable of effectively discriminating between its target classes.

|

|---|

| t-SNE + Attentional coefficients of a pre-trained GAT model, visualised on the Cora citation network dataset. |

Applications

Following the publication of the GAT paper, we have been delighted to witness (as well as contribute to) several new lines of research in which GAT-like architectures have been leveraged to solve challenging problems. Here, we will highlight two subsequent contributions that some of us have developed, and outline some works that have been released by others.

Mesh-based parcellation of the cerebral cortex

In this work (done in collaboration with the University of Cambridge Department of Psychiatry and the Montréal Neurological Institute), we have considered the task of cortical mesh segmentation (predicting functional regions for locations on a human brain mesh). To do this, we have leveraged functional MRI data from the Human Connectome Project (HCP). We found that graph convolutional methods (such as GCNs and GATs) are capable of setting state-of-the-art results, exploiting the underlying structure of a brain mesh better than all prior approaches, enabling more informed decisions.

|

|---|

| Area parcellation qualitative results for several methods on a test subject. The approach of Jakobsen et al. (2016) is the prior state-of-the-art. |

For more details, please refer to our MIDL publication (Cucurull et al., 2018).

Neural paratope prediction

Antibodies are a critical part of the immune system, having the function of directly neutralising or tagging undesirable objects (the antigens) for future destruction. Here we consider the task of paratope prediction: predicting the amino acids of an antibody that participate in binding to a target antigen. A viable paratope predictor is a significant facilitator to antibody design, which in turn will contribute to the development of personalised medicine.

In this work, we build on Parapred, the previous state of the art approach of Liberis et al. (2018), substituting its convolutional and recurrent layers with à trous convolutional and attentional layers, respectively. These layers have been shown to perform more favourably, and allowed us to, for the first time, positively exploit antigen data. We do this through cross-modal attention, allowing amino acids of the antibody to attend over the amino acids of the antigen (which could be seen as a GAT-like model applied to a bipartite antibody-antigen graph). This allowed us to set new state-of-the-art results on this task, along with obtaining insightful interpretations about the model’s mechanism of action.

|

|

|---|---|

| Antibody amino acid binding probabilities to the antigen (in gold) assigned by our model for a test antibody-antigen complex. Warmer colours indicate higher probabilities. | Normalised antigen attention coefficients for a single (binding) antibody amino acid (in red). Warmer colours indicate higher coefficients. |

For more details, please refer to our ICML WCB publication (Deac et al., 2018).

Additional related work

Finally, we outline four interesting relevant pieces of work that leverage or further build on GAT(-like) models. The list is by no means exhaustive!

- Gated Attention Networks (GaAN) (Zhang et al., 2018), where gating mechanisms are inserted into the multi-head attention system of GATs, in order to give different value to different heads’ computations. Several challenging baselines are outperformed on both inductive node classification tasks (Reddit, PPI) and a traffic speed forecasting task (METR-LA).

- DeepInf (Qiu et al., 2018), leveraging graph convolutional layers to modelling influence locality (predicting whether a node will perform a particular action, given the action statuses of its \(r\)-hop neighbours at a particular point in time). Notably, this is the first study where attentional mechanisms (GAT) appear to be necessary for surpassing baseline approaches (such as SVMs or logistic regression), given the heterogeneity of the edges. Furthermore, a very nice qualitative analysis is performed on the action mechanism of the various attention heads employed by the GAT model.

- Attention Solves Your TSP (Kool and Welling, 2018), where GAT-like layers (using the Transformer-style attention mechanism) have been successfully applied to solving combinatorial optimisation problems, specifically the Travelling Salesman Problem (TSP).

- PeerNets (Svoboda et al., 2018), which augment a standard convolutional neural network architecture for image classification with GAT-like layers over a graph of “neighbouring” feature maps from related images in a training dataset. This makes the learnt feature maps substantially more robust, providing a strong defence against a variety of adversarial attacks while sacrificing almost no test accuracy.

Conclusions

We have presented graph attention networks (GATs), novel convolution-style neural networks that operate on graph-structured data, leveraging masked self-attentional layers. The graph attentional layer utilised throughout these networks is computationally efficient (does not require costly matrix operations, and is parallelisable across all nodes in the graph), allows for (implicitly) assigning different importances to different nodes within a neighborhood while dealing with different sized neighborhoods, and does not depend on knowing the entire graph structure upfront—thus addressing many of the theoretical issues with approaches. Results, both within our work and the numerous subsequently published work, highlight the importance of such architectures towards building principled graph convolutional neural networks.

Citation

If you make advantage of the GAT model in your research, please cite the following:

@article{

velickovic2018graph,

title="{Graph Attention Networks}",

author={Veli{\v{c}}kovi{\'{c}}, Petar and Cucurull, Guillem and Casanova, Arantxa and Romero, Adriana and Li{\`{o}}, Pietro and Bengio, Yoshua},

journal={International Conference on Learning Representations},

year={2018},

url={https://openreview.net/forum?id=rJXMpikCZ},

}

Acknowledgements

We would like to thank Andreea Deac, Edgar Liberis and Thomas Kipf for their helpful feedback on prior iterations of this blog post!

-

In general, the adjacency matrix may be weighted, contain edges of different types, or the edges may even have features of their own. We do not consider these cases for simplicity, but the GAT model may be trivially extended to handle them, as was done by EAGCN (Shang et al., 2018). ↩

-

A notable exception is the MoNet framework (Monti et al., 2017) which our work can be considered an instance of. However, in all of the experiments presented in this paper, the weights were implicitly specified based on the graph’s structural properties, meaning that two nodes with same local topologies would always necessarily receive the same (learned) weighting—thus still violating one of the desirable properties for a graph convolution. ↩